IYKYK. Now it’s more like Me, grep and Grok.

Code Fun Blog Posts

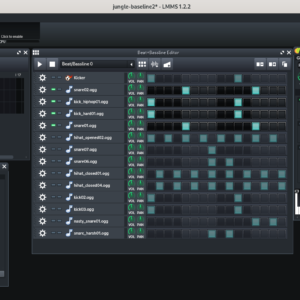

LMMS Channel Separator

Problem: I want to play 2 different instruments from my MIDI keyboard, BUT! … my MIDI keyboard doesn’t support sending output to different channels in any sort of split mode! So I had Grok write a JavaScript page that splits my keyboard: notes below Middle C go to Channel 3, and Middle C and up go to Channel 4. https://web.develevation.com/midi-channler Now, I can […]
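The split described above comes down to one rule applied to every note message: look at the note number and rewrite the channel bits of the status byte. Here is a minimal Python sketch of that rule. It is not the code behind the linked page. It assumes raw 3-byte Note On/Off messages, middle C = MIDI note 60, and 1-based channel numbers as shown in DAWs like LMMS. The name `split_message` is hypothetical.

```python
# Minimal sketch of a MIDI keyboard split (not the code behind the page above).
# Assumptions: raw 3-byte Note On/Off messages, middle C = MIDI note 60,
# and 1-based channel numbers as shown in most DAW UIs such as LMMS.

MIDDLE_C = 60      # MIDI note number conventionally called middle C
LOW_CHANNEL = 3    # 1-based channel for notes below middle C
HIGH_CHANNEL = 4   # 1-based channel for middle C and up

NOTE_OFF = 0x80
NOTE_ON = 0x90


def split_message(message: bytes) -> bytes:
    """Re-address a Note On/Off message to the low or high channel.

    Any other message (control change, pitch bend, clock, ...) passes
    through unchanged.
    """
    if len(message) != 3:
        return message
    status, note, velocity = message
    kind = status & 0xF0  # upper nibble: message type
    if kind not in (NOTE_ON, NOTE_OFF):
        return message
    channel = LOW_CHANNEL if note < MIDDLE_C else HIGH_CHANNEL
    # The lower nibble of the status byte is the 0-based channel number.
    return bytes([kind | (channel - 1), note, velocity])


if __name__ == "__main__":
    print(list(split_message(bytes([0x90, 48, 100]))))  # [146, 48, 100]: channel 3
    print(list(split_message(bytes([0x90, 60, 100]))))  # [147, 60, 100]: channel 4
```

Because the channel depends only on the pitch, a Note Off always lands on the same channel as its matching Note On, so no notes get stuck. A browser page would presumably apply the same rewrite to each message it receives through the Web MIDI API.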

Roblox Sound Effects Heard by You, but Not by Opponents

So I created a Roblox game. I added a gun. The gun works quite well. It shoots people and kills them just like a gun should. When I shoot my gun, I hear it just fine. But when others shoot me in the game, I don’t hear them. My gun’s sounds don’t appear to […]

Notes for – Building makemore Part 5: Building a WaveNet

makemore: part 5. The notebook starts by importing `torch`, `torch.nn.functional as F`, and `matplotlib.pyplot as plt` (for making figures, with `%matplotlib inline`), then reads all the words from `names.txt`: 32033 names, at most 15 characters long, beginning with ['emma', 'olivia', 'ava', 'isabella', 'sophia', 'charlotte', 'mia', 'amelia']. Next it builds the vocabulary of characters […]
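The excerpt stops right at building the vocabulary of characters. As a hedged illustration of what that step involves, here is a minimal pure-Python sketch, not the notebook's own code. It assumes `.` is used as a special start/end token at index 0 and that the remaining characters are numbered in sorted order. The helper name `build_vocab` is hypothetical.

```python
# Minimal sketch of a character vocabulary for a character-level model.
# Assumption: '.' is a reserved start/end token at index 0 and the other
# characters are numbered 1..N in sorted order (not copied from the notebook).


def build_vocab(words):
    chars = sorted(set("".join(words)))                # unique characters, sorted
    stoi = {ch: i + 1 for i, ch in enumerate(chars)}   # string -> integer
    stoi["."] = 0                                       # reserved start/end token
    itos = {i: ch for ch, i in stoi.items()}            # integer -> string
    return stoi, itos


if __name__ == "__main__":
    words = ['emma', 'olivia', 'ava', 'isabella', 'sophia', 'charlotte', 'mia', 'amelia']
    stoi, itos = build_vocab(words)
    print(len(itos))                   # 15: 14 distinct letters plus '.'
    print([stoi[c] for c in "emma"])   # [4, 8, 8, 1]
```

Keeping `stoi` and `itos` as exact inverses is what lets a model's integer predictions be decoded back into names.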

Training Tesla Stock

Credit to Rodolfo Saldanha’s Stock Price Prediction with PyTorch. I just switched the data from Amazon to Tesla. The notebook opens with Kaggle’s default template, which notes that this Python 3 environment comes with many helpful analytics libraries installed (it is defined by the kaggle/python Docker image: https://github.com/kaggle/docker-python) and loads several helpful packages, e.g. `import numpy as np # linear […]`

Notes for – Building makemore Part 3: Activations & Gradients, BatchNorm

Building makemore Part 3 Jupyter Notebook. makemore: part 3. Recurrent Neural Networks (RNNs) are not easily optimizable with the first-order gradient techniques we have available, and the key to understanding why is to understand the activations and their gradients and how they behave during training. The notebook begins: import […]
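One concrete way activations and gradients misbehave: when pre-activations are too large, `tanh` saturates near ±1, and its local gradient `1 - tanh(x)**2` collapses toward zero, so very little gradient flows back through those units. The minimal sketch below measures this with standard-library Python only. It assumes Gaussian pre-activations, and the 0.97 saturation threshold is a choice made for illustration. The helper `tanh_stats` is hypothetical.

```python
# Minimal sketch: larger pre-activation scale -> more saturated tanh units
# -> smaller average local gradient. Assumptions: Gaussian pre-activations
# and a 0.97 saturation threshold chosen for illustration.
import math
import random


def tanh_stats(scale, n=10_000, threshold=0.97, seed=0):
    """Return (fraction of saturated units, mean local gradient)."""
    rng = random.Random(seed)
    saturated = 0
    grad_sum = 0.0
    for _ in range(n):
        t = math.tanh(rng.gauss(0.0, scale))
        if abs(t) > threshold:
            saturated += 1
        grad_sum += 1.0 - t * t  # d tanh(x)/dx = 1 - tanh(x)^2
    return saturated / n, grad_sum / n


if __name__ == "__main__":
    for scale in (0.5, 1.0, 5.0):
        frac, mean_grad = tanh_stats(scale)
        print(f"scale={scale}: saturated={frac:.1%}, mean local grad={mean_grad:.3f}")
```

Shrinking the scale of the weights feeding a `tanh` layer keeps most units out of the flat regions, which is the motivation for careful initialization and normalization layers such as BatchNorm.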

Notes for – Building makemore Part 2: MLP

Scaling a table of normalized probability counts to longer contexts makes it grow exponentially. You can use Multi-Layer Perceptrons (MLPs) instead, training them to maximize the log-likelihood of the training data. MLPs make predictions by embedding words in a space where similar words sit close together, so that knowledge can transfer between interchangeable words with good confidence. With a vocabulary of 17000 […]
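The exponential blow-up is easy to see with arithmetic. A count table needs one row for every possible context and one column for every possible next token, so with vocabulary size V and context length k it has V^(k+1) entries. An embedding table has V × d entries no matter how long the context is (the MLP's first hidden layer then grows only linearly in k). The sketch below assumes V = 27 for characters (26 letters plus a `.` token) and uses the 17000-word vocabulary from the paragraph above. The embedding size of 30 is a hypothetical choice, and both helper names are made up for illustration.

```python
# Worked arithmetic: count-table size vs. embedding-table size.
# Assumptions: V = 27 for characters, embedding size d = 30 (hypothetical).


def count_table_entries(vocab_size, context_len):
    # vocab_size ** context_len possible contexts (rows),
    # times vocab_size possible next tokens (columns).
    return vocab_size ** (context_len + 1)


def embedding_params(vocab_size, embed_dim):
    # One embed_dim-dimensional vector per token, independent of context length.
    return vocab_size * embed_dim


if __name__ == "__main__":
    for k in (1, 2, 3, 4):
        print(f"chars, context {k}: {count_table_entries(27, k):,} entries")
    print(f"words, context 3: {count_table_entries(17000, 3):,} entries")
    print(f"word embedding table (d=30): {embedding_params(17000, 30):,} parameters")
```

With a character context of 3, the count table already needs 531,441 entries, and for 17000 words it needs 17000^4 (about 8.4 × 10^16), while the embedding table stays at 510,000 parameters.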

Notes for – The spelled-out intro to language modeling: building makemore

Derived from: https://github.com/karpathy/nn-zero-to-hero/blob/master/lectures/makemore/makemore_part1_bigrams.ipynb
Makemore’s purpose: to make more of the examples you give it. E.g., training makemore on names will make unique-sounding names. This dataset will be used to train a character-level language model, which models sequences of characters and predicts the next character in a sequence. makemore implements a series of language […]
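The simplest model in that series is the bigram model that the linked notebook is named after: count how often each character follows another, normalize the counts into probabilities, and sample the next character from them. The sketch below is a plain-Python illustration of that idea, not the notebook's own code. It assumes `.` marks both the start and end of a name, and the helpers `bigram_counts`, `next_char_probs`, and `sample_name` are hypothetical.

```python
# Minimal bigram character model in plain Python (illustrative sketch).
# Assumption: '.' marks the start and the end of every name.
import random
from collections import Counter


def bigram_counts(words):
    counts = Counter()
    for w in words:
        chs = ["."] + list(w) + ["."]
        for a, b in zip(chs, chs[1:]):   # consecutive character pairs
            counts[(a, b)] += 1
    return counts


def next_char_probs(counts, prev):
    # Normalize the row of counts for `prev` into a probability distribution.
    row = {b: n for (a, b), n in counts.items() if a == prev}
    total = sum(row.values())
    return {b: n / total for b, n in row.items()}


def sample_name(counts, rng):
    out, ch = [], "."
    while True:
        probs = next_char_probs(counts, ch)
        ch = rng.choices(list(probs), weights=list(probs.values()))[0]
        if ch == ".":                    # end token: the name is finished
            return "".join(out)
        out.append(ch)


if __name__ == "__main__":
    counts = bigram_counts(["emma", "olivia", "ava"])
    print(next_char_probs(counts, "."))  # each of 'e', 'o', 'a' starts one name
    rng = random.Random(42)
    print([sample_name(counts, rng) for _ in range(5)])
```

Trained on the full names dataset, the same counting produces new names that "sound" like names. The notebook's later parts replace the count table with learned models.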