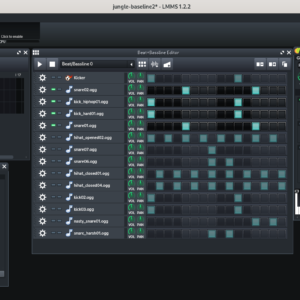

Problem: I want to play two different instruments from my MIDI keyboard, but my keyboard doesn't support sending to different channels in any sort of split mode! So I had Grok write a JavaScript page that splits the keyboard: notes below Middle C go out on Channel 3, and Middle C and up go out on Channel 4. https://web.develevation.com/midi-channler Now, I can […]
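
For the curious, here is a rough sketch of what a split like this can look like in JavaScript. It is not the code behind the page linked above. The names `splitByMiddleC` and `connectSplit` are made up for illustration, and it assumes Middle C is MIDI note 60 and that only note-on and note-off messages need rerouting.

```javascript
// Illustrative sketch only; all names here are hypothetical.
const MIDDLE_C = 60;      // assumed MIDI note number for Middle C
const LOWER_CHANNEL = 3;  // notes below Middle C (1-based channel number)
const UPPER_CHANNEL = 4;  // Middle C and up (1-based channel number)

// Takes a MIDI message as [status, data1, data2] and returns a copy
// with the channel rewritten for note-on / note-off messages.
function splitByMiddleC(message) {
  const [status, note, velocity] = message;
  const type = status & 0xf0; // upper nibble is the message type

  // Anything that is not note-off (0x80) or note-on (0x90) passes through unchanged.
  if (type !== 0x80 && type !== 0x90) {
    return Array.from(message);
  }

  const channel = note < MIDDLE_C ? LOWER_CHANNEL : UPPER_CHANNEL;
  // The lower nibble of the status byte holds the channel, zero-based on the wire.
  return [type | (channel - 1), note, velocity];
}

// Forwards everything from a Web MIDI input to an output, rerouting notes on the way.
function connectSplit(input, output) {
  input.onmidimessage = (event) => {
    output.send(splitByMiddleC(event.data));
  };
}
```

In a browser that supports the Web MIDI API, `navigator.requestMIDIAccess()` resolves to an object whose `inputs` and `outputs` maps hold the ports you would pass to `connectSplit`. Pitch bend, sustain pedal, and other non-note messages stay on whatever channel the keyboard sent them on in this sketch, so a real setup might want to copy those to both channels.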